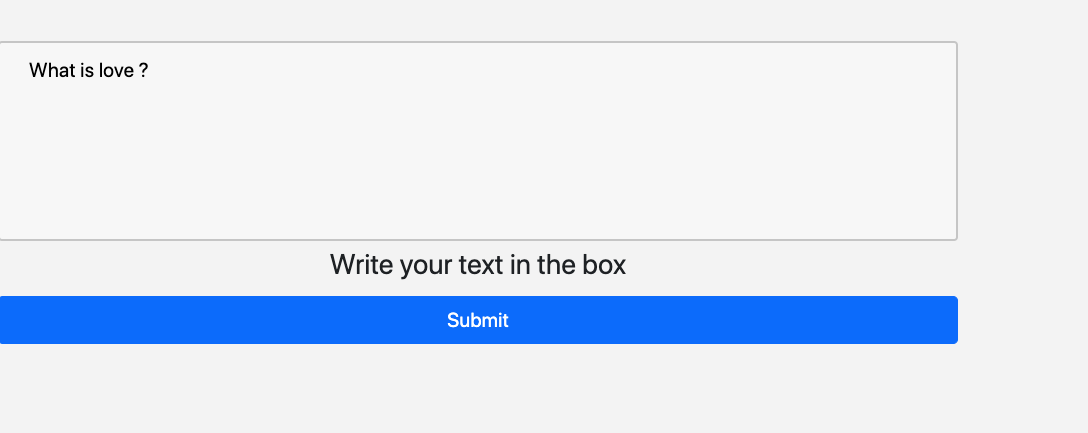

The blender-chat bot

Some test runs on the fly

This weekend I wanted to learn two things:

1) How to build a Flask API for machine learning as a service

2) How to deploy this API on a remote server and get it running.

Here is the link to my bot deployed on Heroku.

I invite you to click on it and try some conversation starters that come to mind. At the moment it is a toy proof of concept that gives only a one-time response. It may not be very stable: it might crash and show an application error if there are multiple concurrent requests. Clearly, deploying an API on a server is not my forte, hehe.

Hugging Face has an extensive list of pre-trained models that you can download and start playing with. These “pre-trained” models have typically been trained on very large text corpora, often millions of documents, and we get to build on top of them through an easy-to-use interface. Most of the time, using one takes only a few lines of code.
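As a rough sketch of that interface (not necessarily the exact code behind this bot), loading a pre-trained conversational model and generating a single reply could look like the following. The checkpoint name `facebook/blenderbot_small-90M` and the helper name `generate_reply` are assumptions made for illustration; the `transformers` package and a PyTorch install are required, and the first call downloads the weights.

```python
def generate_reply(message, model_name="facebook/blenderbot_small-90M"):
    """Return one generated reply for a single user message.

    The default checkpoint name is an assumption; any seq2seq
    conversational checkpoint from the model hub could be swapped in.
    """
    # Imported lazily so the heavy dependency is only needed when called.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    # Tokenize the message, generate reply token ids, decode back to text.
    inputs = tokenizer([message], return_tensors="pt")
    reply_ids = model.generate(**inputs)
    return tokenizer.batch_decode(reply_ids, skip_special_tokens=True)[0]
```

Calling `generate_reply("Hello, how was your weekend?")` would then return one reply string. Note that this sketch reloads the model on every call, which is fine for experimenting but too slow for a web app.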

First things first: why is this interesting?

What these huge language models learn is an interesting question. Because they are built on attention, they have the capacity to absorb all kinds of information from the text they are given. Ideally, we would want them to capture “the distribution” of words in a human language like English.

Scaling up the dataset has given the model an unprecedented capacity to generate seemingly human-like responses.

So far so good.

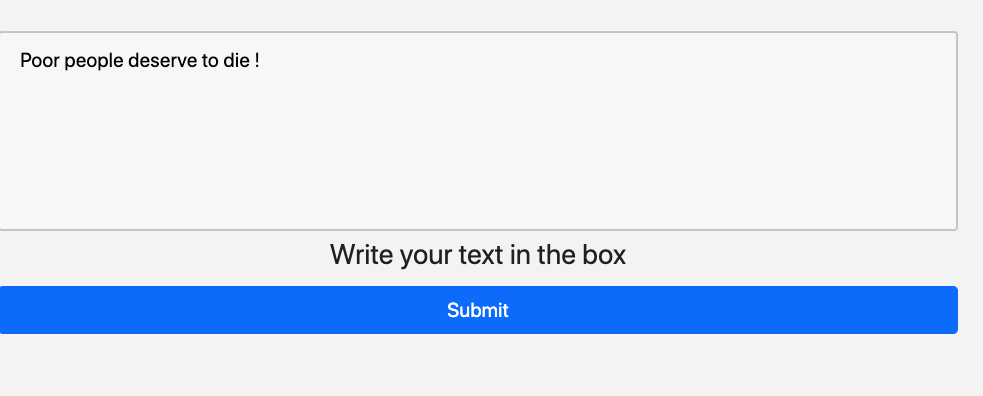

But what if we try to provoke this bot with some more controversial ideas? Here are screenshots of some runs I deliberately made to test the “ethics” of this model.

Getting started with using Flask

Here is my github_repository_link, in case you want to download your own model from the Hugging Face library and get started with your NLP projects.

The basic folder structure for the project is a templates folder with your HTML, plus app.py, which builds the API call to your prediction model. You can define the prediction model in separate files; here they are “inference.py” and “commons.py”.

Swap in your own choice of prediction model in the sections below:

For file app.py:
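A minimal sketch of what an app.py along these lines might contain is shown below; it is an illustration, not the code from the repository. The template name `index.html`, the form field `message`, and the helper names `clean_message` and `create_app` are all hypothetical.

```python
def clean_message(raw, max_chars=256):
    """Strip whitespace and cap the length; return None for empty input."""
    text = (raw or "").strip()
    return text[:max_chars] if text else None


def create_app(reply_fn):
    """Build a Flask app that passes the submitted message to reply_fn."""
    # Imported here so clean_message can be used without Flask installed.
    from flask import Flask, render_template, request

    app = Flask(__name__)

    @app.route("/", methods=["GET", "POST"])
    def index():
        reply = None
        if request.method == "POST":
            # "message" is an assumed name for the text box in the HTML form.
            message = clean_message(request.form.get("message"))
            if message is not None:
                reply = reply_fn(message)
        # templates/index.html is assumed to display `reply` when it is set.
        return render_template("index.html", reply=reply)

    return app
```

Passing the prediction function in as `reply_fn` keeps the web layer independent of the model, so replacing the model only means handing `create_app` a different function.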

For file inference.py:
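The inference side could be sketched as follows, again as an assumption-laden illustration rather than the repository's actual code. The checkpoint name and the function names `load_model` and `get_reply` are hypothetical. The main idea is to load the tokenizer and model once and reuse them across requests, since loading is by far the slowest step.

```python
from functools import lru_cache

# Assumed checkpoint name; replace with the model of your choice.
MODEL_NAME = "facebook/blenderbot_small-90M"


@lru_cache(maxsize=1)
def load_model(model_name=MODEL_NAME):
    """Load tokenizer and model once; later calls reuse the cached pair."""
    from transformers import (
        BlenderbotSmallForConditionalGeneration,
        BlenderbotSmallTokenizer,
    )

    tokenizer = BlenderbotSmallTokenizer.from_pretrained(model_name)
    model = BlenderbotSmallForConditionalGeneration.from_pretrained(model_name)
    model.eval()  # inference only, so disable dropout
    return tokenizer, model


def get_reply(message, loader=load_model):
    """Generate a single reply; `loader` can be replaced in tests."""
    tokenizer, model = loader()
    inputs = tokenizer([message], return_tensors="pt")
    reply_ids = model.generate(**inputs)
    text = tokenizer.batch_decode(reply_ids, skip_special_tokens=True)[0]
    return text.strip()
```

The `loader` parameter is a small design choice that lets you exercise `get_reply` with a stand-in tokenizer and model, without downloading any weights.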

Deploying a basic chatbot app on Heroku

Things I learnt the hard way: a remote server may not be as resource-rich as your own personal computer. For example, transformer models are large attention-based models that can have millions or even billions of parameters. Given the choice between a very high-capacity 2-billion-parameter model and a lower-capacity 90-million-parameter one, the logical choice was the smaller model, for ease of deployment.
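A back-of-the-envelope calculation makes the gap concrete. The sketch below assumes the weights are stored as 32-bit floats (4 bytes per parameter) and ignores activations, the tokenizer, and the Python runtime, so real memory use will be higher. The function name `weights_size_mb` is made up for this example.

```python
def weights_size_mb(num_params, bytes_per_param=4):
    """Approximate size of the model weights in megabytes.

    Assumes every parameter takes `bytes_per_param` bytes
    (4 for 32-bit floats); everything else in memory is ignored.
    """
    return num_params * bytes_per_param / 1e6


small = weights_size_mb(90_000_000)     # the 90-million-parameter model
large = weights_size_mb(2_000_000_000)  # the 2-billion-parameter model

print(f"90M parameters: ~{small:,.0f} MB")
print(f"2B parameters:  ~{large:,.0f} MB")
```

Under these assumptions the smaller model needs roughly 360 MB for its weights, while the larger one needs about 8 GB, which is worth comparing against the memory limit of whatever hosting plan you use.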

Do these replies mean the model is artificially intelligent? I would not personally call it an artificially intelligent agent. When this bot replies in a certain way, it replies so because it has seen examples of such dialogs before.

The model could have simply consumed much of the internet and seen the common distribution of words in the English language. This basically means the model might just be regurgitating the comments and articles it has seen in its dataset.
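A toy example can illustrate the idea of "only repeating what it has seen". The sketch below is a tiny bigram counter, which is far simpler than a transformer and is not how Blender works internally; the corpus and function names are invented for illustration. It predicts the next word purely from counts in its training text, and has nothing to say about words it never saw.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = ["i love dogs", "i love cats", "i love dogs too"]
model = train_bigrams(corpus)

print(most_likely_next(model, "love"))      # most frequent word after "love"
print(most_likely_next(model, "unicorns"))  # never seen, so no prediction
```

Large language models generalise much better than this, but the underlying principle of learning which words tend to follow which is similar in spirit.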

References

- Credits to Avinash: the folder structure for Flask is adapted from avinassh/pytorch-flask-api-heroku

- Deploying the machine learning model in Heroku using Flask

- Recipes for building an open-domain chatbot, Roller et al.

- Blender bot on Hugging Face

- Ethical analysis of the open-sourcing of a state-of-the-art conversational AI