Natural Language Processing for low resource languages Part 1

Text must change to vectors

2. Code for finding most similar authors in the dataset

At the end of this blog, we would know how to model a simple content based recommendation system. We would be working towards a content based approach , which would develop a topic level understanding of the authors written work, in this example their ghazals/poems.

Hypothetically, suppose that you like ghazals written by “Mirza Ghalib” maybe because of his choice of topics and content. Based on our model, it seems, you would also like the works of Meer Taqi Meer and Firaq Gorakhpuri. *(hehe would need to validate this by actually asking some readers)

===============================

Author is ---- mirza-ghalib

Most similar authors are ----

meer-taqi-meer 0.89

firaq-gorakhpuri 0.86

noon-meem-rashid 0.73

bahadur-shah-zafar 0.60

===============================

*similarity values are in descending order, implying lesser similarity to the original author.

What is a low resource language ?

Many of the popular transformer based models use data available on internet, such as Wikipedia or reddit. While the English Wikipedia alone has 3.7 billion words , the same can not be said about all other human languages of the world. As more of the world chooses to publish and blog in English, there is less and less data output being produced in other languages.

Why is this interesting ? Urdu is a low resource language in NLP. Compared to English, which could have hundreds of thousands of articles floating around on the internet, there is not much content for Urdu, to train ML language models .

Ghazal is a form of poetry popular in South Asia. In terms of NLP, it provides interesting possiblities for future testing of language models. Wikipedia:

- The ghazal is a short poem consisting of rhyming couplets, called Sher or Bayt.

- Most ghazals have between seven and twelve shers. For a poem to be considered a true ghazal, it must have no fewer than five couplets.

- Almost all ghazals confine themselves to less than fifteen couplets (poems that exceed this length are more accurately considered as qasidas). Ghazal couplets end with the same rhyming pattern and are expected to have the same meter.

- The ghazal’s uniqueness arises from its rhyme and refrain rules, referred to as the ‘qaafiyaa’ and ‘radif’ respectively.

- Each sher is self-contained and independent from the others, containing the complete expression of an idea.

All data credits belong to the wonderful work done by Rekhta foundation

==============================================

Purpose of this blog:

1) Crawl a dataset for a low resource language 2) Try a toy nlp model for this data.

I want to highlight an important point at this momement. 4Mb of text data is nothing compared to what transformer based models actually need. 1300 text files in total.

common crawl dataset is a giant repository of free text data in more than 40 languages. If you actually want to train a transformer model from scratch, you would need data in order of millions of text files. And for that it would be best to start with one of these big data tools.

===============================================

Here is the same crawled dataset being put to use for finding out two most similar poets based on their written texts.

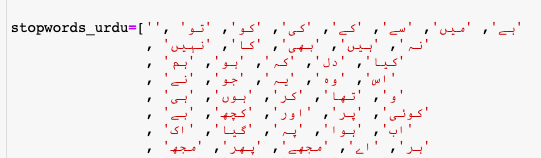

Stop words removal

Stopwords are words which occur with a very high frequency but usually don’t have a lot of meaning. Think articles ‘a’,’an’,’the’ in English, prepositions or repeatitive verbs like ‘is’,’are’.

================================

Sample of a tokenized document:

['سامنے', 'ستائش', 'چاہا', 'ہونٹوں', 'جنبش', 'اہل', 'محفل', 'کب', 'احوال', 'کھلا', 'اپنا', 'خاموش', 'پرسش', 'قدر', 'تعلق', 'چلا', 'جاتا', 'رنج', 'خواہش', 'کم', 'دونوں', 'بھرم', 'قائم', 'بخشش', 'گزارش', 'ادب', 'آداب', 'پیاسا', 'رکھا', 'محفل', 'صراحی', 'گردش', 'دکھ', 'اوڑھ', 'خلوت', 'پڑے', 'رہتے', 'بازار', 'زخموں', 'نمائش', 'ابر', 'کرم', 'ویرانۂ', 'جاں', 'دشت', 'بارش', 'کٹ', 'قبیلے', 'حفاظت', 'مقتل', 'شہر', 'ٹھہرے', 'جنبش', 'ہمیں', 'بھول', 'عجب', 'فرازؔ', 'میل', 'ملاقات', 'کوشش']

length of this document 60

================================

Total number of documents/ghazals 1314

number of token before stopwords removal 194282

after removal of stopwords 89404

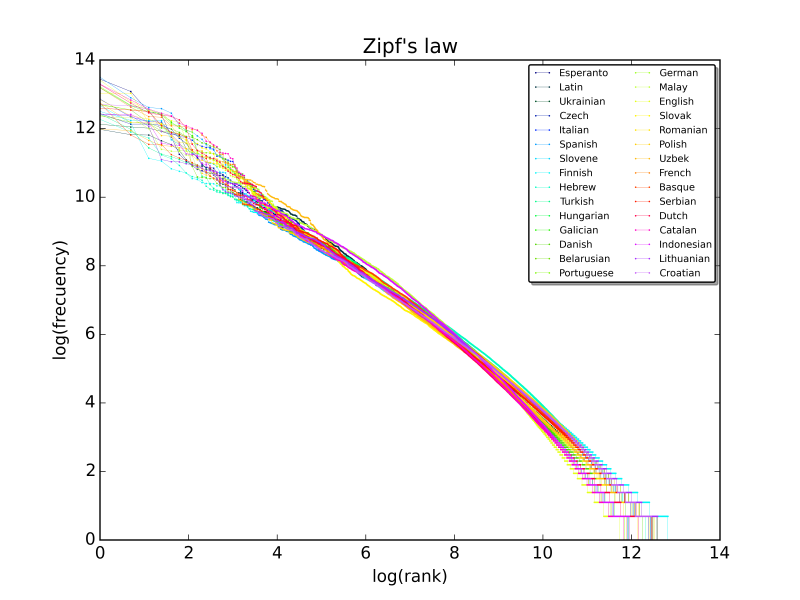

Stopwords interestingly follow zipf’s law, where going from the most common word (most frequent) to least common word, would follow an approximately exponential graph.

*image source wikipedia

========================

Token frequency distribution

========================

عشق 523

غم 467

سر 360

نظر 349

بات 311

سب 292

گل 291

یاد 269

گھر 256

رہ 248

Different topics here could possibly represent which words often occur together in a document/poem.We have trained our LDA model here for 6 topics.

topic 0::

ساتھ

دیکھتے

بات

=======================

topic 1::

جاتا

ملتا

ہوتے

=======================

topic 2::

ہوگا

آتے

عشق

=======================

topic 3::

خدا

غم

بات

=======================

topic 4::

غم

جاتے

یوں

=======================

topic 5::

عشق

گل

نظر

=======================

Building basic topic vector representations for each of the 30 authors

#Toy example

#Which two authors are most similar in terms of choice of topics or words used?

======================

sample document vector for an author

50

tensor([0.0000, 0.0000, 0.3098, 0.0000, 0.6847, 0.0000], dtype=torch.float64)

======================

Mean representation of all works of an author For now taking a mean representation for each author across his different works can be the most trivial approach, to get a single representation vector summarizing all their work. Of course this shall be an approximate representation

author1='mirza-ghalib'

vector1=author_mean_vectors[author1]

max_sim=0

most_sim=''

for author in author_mean_vectors:

if author!=author1:

sim=torch.dot(vector1,author_mean_vectors[author])

if sim>=max_sim:

max_sim=sim

most_sim=author

print(most_sim)

firaq-gorakhpuri

A more expanded list of similar authors for some author poets:

===============================

Author is ---- kaifi-azmi

Most similar authors are ----

ahmad-faraz 0.891351

waseem-barelvi 0.698940

javed-akhtar 0.694203

habib-jalib 0.635275

===============================

This ranking of similarity seems to make sense to me ,as Kaifi Azmi wrote songs for Bollywood movies and so did Javed Akhtar and Waseem Barelvi.

Author is ---- mirza-ghalib

Most similar authors are ----

meer-taqi-meer 0.897031

firaq-gorakhpuri 0.867845

noon-meem-rashid 0.732428

bahadur-shah-zafar 0.604054

===============================

Mirza Ghalib was a contemporary of bahadur shah zafar, hence it seems the nature of topics might have been similar. Also interestingly do these results say Ghalib was influenced a lot by the style of Meer Taqi Meer who came before him?

Author is ---- allama-iqbal

Most similar authors are ----

noon-meem-rashid 0.788498

nazm-tabatabai 0.781452

meer-anees 0.715229

meer-taqi-meer 0.623361

===============================================

Allama Iqbal wrote extensively on topics related to religion and self identity.

In my next blog I will try to build upon this crawled dataset. This is a sort of, kind of , mini finetuning dataset for a transformer model.

The text is available in English, Urdu and Hindi. So I could run and test the strength of this multi-lingual model in these 3 different ‘token’ approaches.