Using NLP for a hybrid recommendation system

Content or likes, what works better?

What is a recommendation system?

A recommendation system is designed to ease our exploration. The recommendation model should be able to suggest interesting items for the user to consume, without the user having to invest a lot of time. Think of your Facebook feed or your YouTube recommendations.

There are different approaches to building a recommendation system. You can recommend to a user what everyone else is already consuming (the trending approach).

Or you can recommend items that existing users similar to this new user have already liked (the user-item approach, also known as collaborative filtering).

Or you can recommend items based on an understanding of their content. In this case the model knows the meaning behind the text or video, and suggests items to the user on the guess that they would like them. Of course, building a content-level understanding of both the item and the user is a prerequisite here.
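To make the first and third approaches concrete, here is a minimal sketch on made-up toy data (the like matrix, the 2-dimensional item vectors and the function names are all hypothetical). "Trending" simply ranks items by how many users liked them; "content-based" ranks items by cosine similarity to the average content vector of what the user already liked. Collaborative filtering is what the rest of this post builds, so it is left out here.

```python
import numpy as np

# Hypothetical toy data: 4 users x 3 items, 1 = liked, 0 = not liked.
likes = np.array([
    [1, 0, 1],
    [1, 1, 0],
    [0, 0, 1],
    [1, 0, 0],
])

# Hypothetical content vectors for the same 3 items (2 dimensions each).
item_vectors = np.array([
    [0.9, 0.1],
    [0.2, 0.8],
    [0.7, 0.3],
])


def trending(likes: np.ndarray, k: int) -> list[int]:
    """Trending approach: rank items by how many users liked them."""
    counts = likes.sum(axis=0)
    return [int(i) for i in np.argsort(-counts, kind="stable")[:k]]


def content_based(liked_items: list[int], item_vectors: np.ndarray, k: int) -> list[int]:
    """Content approach: rank items by cosine similarity to the mean of the liked items."""
    profile = item_vectors[liked_items].mean(axis=0)
    norms = np.linalg.norm(item_vectors, axis=1) * np.linalg.norm(profile)
    sims = item_vectors @ profile / norms
    return [int(i) for i in np.argsort(-sims, kind="stable")[:k]]


print(trending(likes, 2))                     # [0, 2]
print(content_based([1], item_vectors, 3))    # [1, 2, 0]
```

Note that the trending list is the same for everybody, while the content-based list depends on what this particular user liked.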

Why is this interesting?

I have prepared my own dataset, and I thought it would be interesting to put it under the hammer for different kinds of applications. In this blog, I try to demonstrate a basic end-to-end recommendation system put into practice.

The recommendation system here is a kind of ‘hybrid’ between a ‘content’-based approach and a ‘user-item’ likes approach. Basically, I have tried to combine both approaches: we recommend items based on both the meaning of the content and what similar users have already liked in the past.

What kind of data are we working with here?

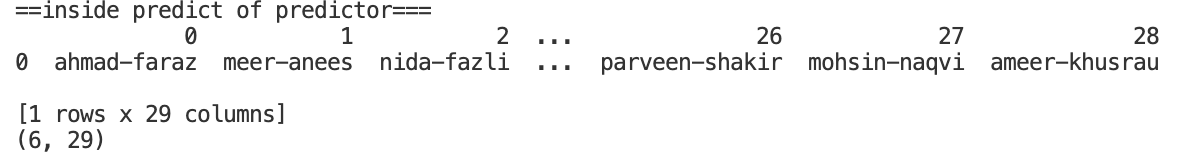

We begin with a dataset of texts (ghazals) written by different Urdu poets, taken from Rekhta; see this. For ease of use, I currently restrict the dataset to 29 authors.

Most of the time, our work in ML is to build a vector representation of the things we want to work with. Something as simple as the letter ‘A’, the word ‘Apple’, or the digits ‘1’ and ‘42’ has to be converted to a vector representation first, so that our ML models can understand and process it.
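The simplest possible vector representation is a one-hot encoding over a fixed vocabulary. The sketch below (vocabulary and function name are illustrative) shows it for the tokens mentioned above. One-hot vectors carry no notion of meaning, which is why learned dense representations, like the author vectors used below, are preferred for recommendation.

```python
import numpy as np


def one_hot(token: str, vocabulary: list[str]) -> np.ndarray:
    """Return a vector of zeros with a single 1 at the token's position in the vocabulary."""
    vec = np.zeros(len(vocabulary))
    vec[vocabulary.index(token)] = 1.0
    return vec


vocab = ["A", "Apple", "1", "42"]
print(one_hot("Apple", vocab))  # [0. 1. 0. 0.]
```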

Fortunately, we already have vector representations for each of the authors in this dataset. We developed this representation in this previous blog.

Example vector representation of content for each author (only the first five dimensions are shown here):

| | author | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| 0 | ahmad-faraz | 0.219659 | 0.142931 | 0.083009 | 0.105431 | 0.396997 |
| 1 | meer-anees | 0.028339 | 0.191117 | 0.115029 | 0.594038 | 0.037311 |
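Once authors are vectors, "how similar are two authors?" becomes a simple computation. A minimal sketch, assuming cosine similarity as the measure and using only the five dimensions printed in the table (the full representation has more, so the real value would differ):

```python
import numpy as np
import pandas as pd

# The two rows from the table above (only the printed dimensions).
authors = pd.DataFrame(
    {
        "author": ["ahmad-faraz", "meer-anees"],
        1: [0.219659, 0.028339],
        2: [0.142931, 0.191117],
        3: [0.083009, 0.115029],
        4: [0.105431, 0.594038],
        5: [0.396997, 0.037311],
    }
).set_index("author")


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


faraz = authors.loc["ahmad-faraz"].to_numpy()
anees = authors.loc["meer-anees"].to_numpy()
sim = cosine_similarity(faraz, anees)
print(round(sim, 3))
```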

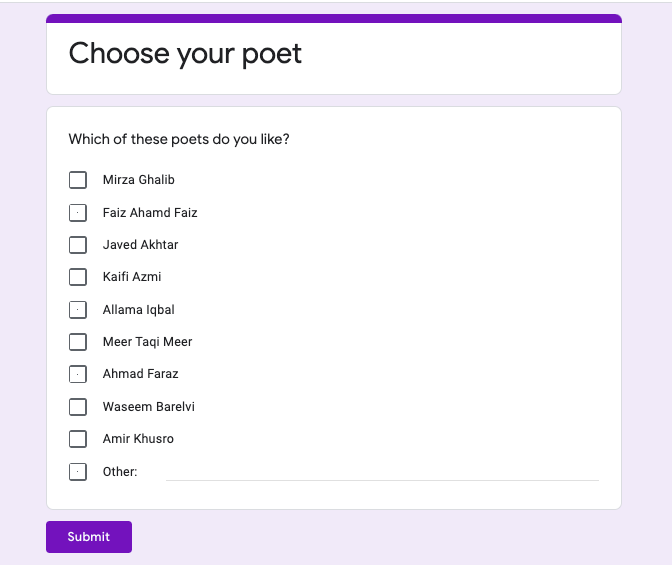

For user-item ratings, a simple Google Forms survey is a convenient way to collect data about what different users like or dislike.

The key assumption behind a user-item approach, also called the ‘collaborative filtering’ approach, is that users with similar taste rate similar items in a similar fashion. There should be a consistent pattern behind their likes and dislikes (hopefully, otherwise all of this goes kaput).

Here’s a sample of this survey form:

Example user-item ratings on different authors:

| user | ahmad-faraz | meer-anees | nida-fazli | akbar-allahabadi | jigar-moradabadi |
|---|---|---|---|---|---|
| 1 | 3 | 1 | 3 | 0 | 0 |
| 2 | 2 | 3 | 0 | 2 | 3 |
| 3 | 0 | 0 | 3 | 1 | 0 |

Let’s say we have collected 10 people’s opinions on this data (too few, I know). Using this like and dislike data, where non-selection is implicitly treated as a dislike, we build the first user-item matrix.
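Building the matrix from raw survey answers amounts to "missing means 0". A minimal sketch, assuming each response arrives as a dict mapping the authors a user selected to their rating (this response format is hypothetical); the values below reproduce the sample table:

```python
import pandas as pd

AUTHORS = ["ahmad-faraz", "meer-anees", "nida-fazli", "akbar-allahabadi", "jigar-moradabadi"]

# Hypothetical survey responses: only the authors a user selected appear.
responses = [
    {"ahmad-faraz": 3, "meer-anees": 1, "nida-fazli": 3},
    {"ahmad-faraz": 2, "meer-anees": 3, "akbar-allahabadi": 2, "jigar-moradabadi": 3},
    {"nida-fazli": 3, "akbar-allahabadi": 1},
]

# Unselected authors become NaN; treat them as dislikes (0).
matrix = pd.DataFrame(responses, columns=AUTHORS).fillna(0).astype(int)
matrix.index = range(1, len(matrix) + 1)  # user ids as in the sample table
print(matrix)
```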

We call it the first user-item matrix because the model should eventually be retrained whenever more data is collected. The cool thing is that over time you will always get new users, and more data.

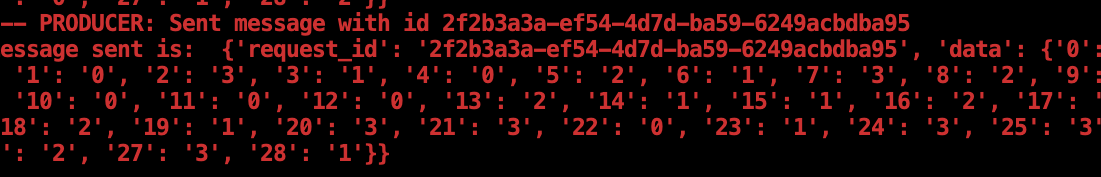

But how do we trigger updates once enough new users have been collected? This is where Kafka comes into the picture.

Kafka pipeline for triggering model updates

A Kafka pipeline is a great tool here. Recall that the current model has two parts:

- The content-based representations of all authors

- The user-item matrix: basically, the likes and dislikes of users on different authors.

In a real-world application, data keeps coming in whenever more users come to use your platform. Model updates let you serve your customers better, as user interests and trending topics can change over time. We can start with an initial model built on the limited initial user data, and then trigger updates via Kafka. In the beginning, we would expect the ‘content’-based part of the model to do most of the heavy lifting for us.

Two things I had to understand were what a ‘Kafka producer’ is and what a ‘Kafka consumer’ is. In this scenario, the Kafka producer publishes the incoming user data, which is then collected (say, stored in a database or a file). A Kafka consumer triggers the model retrain each time we have collected observations from, say, 50 new users. This new data is appended to the old user-item matrix, and a new training loop is run.
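The "retrain every 50 new users" rule is just a buffer with a threshold. Below is a minimal sketch of that logic with a hypothetical RetrainTrigger class. To keep it runnable without a broker, a plain loop stands in for the message stream; in a real pipeline on_message would be called for each message read by a Kafka consumer (for example by iterating over KafkaConsumer from the kafka-python package). It also assumes one message per new user.

```python
from typing import Callable


class RetrainTrigger:
    """Hypothetical helper: buffers user responses and calls `retrain` every `batch_size` users."""

    def __init__(self, batch_size: int, retrain: Callable[[list[dict]], None]) -> None:
        self.batch_size = batch_size
        self.retrain = retrain
        self.buffer: list[dict] = []

    def on_message(self, response: dict) -> None:
        # Assumption: one message corresponds to one new user's survey response.
        self.buffer.append(response)
        if len(self.buffer) >= self.batch_size:
            batch, self.buffer = self.buffer, []
            self.retrain(batch)


# Simulate 120 incoming responses standing in for messages from a Kafka topic.
retrain_calls: list[int] = []
trigger = RetrainTrigger(batch_size=50, retrain=lambda batch: retrain_calls.append(len(batch)))
for user_id in range(120):
    trigger.on_message({"user": user_id, "likes": {}})

print(retrain_calls)        # [50, 50]
print(len(trigger.buffer))  # 20
```

The in-memory buffer here would be lost if the consumer crashed, which is one reason to persist incoming data to a database or file first, as described above.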

This new data is used to recompute the user-item collaborative filtering matrix. (These complete re-runs are expensive, and real-world approaches at large organizations will of course differ.)
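Appending a batch of new users to the old matrix is a single concatenation in pandas, provided that authors missing on either side are filled with 0, consistent with the "non-selection means dislike" rule. A minimal sketch with a hypothetical helper name and toy frames:

```python
import pandas as pd


def append_new_users(old: pd.DataFrame, new: pd.DataFrame) -> pd.DataFrame:
    """Stack new users' rows under the existing user-item matrix.

    Authors missing from either frame become 0 (treated as "not selected").
    """
    combined = pd.concat([old, new], ignore_index=True)
    return combined.fillna(0).astype(int)


old = pd.DataFrame({"ahmad-faraz": [3, 2], "meer-anees": [1, 3]})
new = pd.DataFrame({"ahmad-faraz": [0], "nida-fazli": [3]})
matrix = append_new_users(old, new)
print(matrix)
```

After this step the full training loop is simply re-run on the combined matrix, which is the complete (and expensive) re-run mentioned above.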

If you want more details, there is a wonderful blog by Javier Rodriguez Zaurin, which is a good place to see this in action. The Kafka pipeline takes away the worry of how and when to trigger model updates.

What is the formula at work?

The basic assumption behind the recommendation is that we have already figured out the user and the item. By ‘figured out’ I mean that there is a factor P for users and a factor Q for items, which together model a like or dislike.

A like is basically a ‘score’ from a user u for an item i:

$ \text{score}_{u,i} = P_u \cdot Q_i $

P models the users, with dimension (number of users × latent dim); its row $P_u$ is the latent vector of user u.

Q models the items, with dimension (number of items × latent dim); its row $Q_i$ is the latent vector of item i.
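In NumPy the formula and the shapes translate directly. The sketch below uses random placeholder values for P and Q; the sizes (10 users, 29 authors, latent dim 6) are taken from this post. A single score is a row-by-row dot product, and all scores at once are the matrix product of P with Q transposed.

```python
import numpy as np

rng = np.random.default_rng(0)
n_users, n_items, latent_dim = 10, 29, 6

# Placeholder values: in the real model these are learned.
P = rng.normal(scale=0.1, size=(n_users, latent_dim))  # one row per user
Q = rng.normal(scale=0.1, size=(n_items, latent_dim))  # one row per item (author)

# Score of a single (user, item) pair: the dot product of their latent vectors.
u, i = 3, 7
score_ui = P[u] @ Q[i]

# Scores for every pair at once: (n_users x latent_dim) @ (latent_dim x n_items).
scores = P @ Q.T
print(scores.shape)  # (10, 29)
```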

The choice of latent dim is itself a hyperparameter. But the simple hybrid approach chosen here initializes the matrix Q with the content representation values, so we choose the latent dimension size to be 6, the same as the dimension of the original content vector representation. P and Q are then learned with a gradient descent training rule, so that their dot products reproduce the observed scores.
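The training code used for this post came from the Colab notebook cited in the references; what follows is not that code, only a minimal sketch of the same idea under stated assumptions: plain SGD matrix factorization with squared error and L2 regularization, every cell of the user-item matrix treated as observed (unselected = 0), and Q initialized from the content vectors (the hybrid part) and then updated. Function names, learning rate, regularization strength and epoch count are all illustrative choices.

```python
import numpy as np


def train_hybrid_mf(
    ratings: np.ndarray,
    content: np.ndarray,
    epochs: int = 200,
    lr: float = 0.01,
    reg: float = 0.02,
    seed: int = 0,
) -> tuple[np.ndarray, np.ndarray]:
    """SGD matrix factorization with Q initialized from content vectors.

    ratings: (n_users x n_items); every entry is treated as observed.
    content: (n_items x latent_dim) content representation of each item.
    """
    rng = np.random.default_rng(seed)
    n_users, n_items = ratings.shape
    latent_dim = content.shape[1]
    P = rng.normal(scale=0.1, size=(n_users, latent_dim))  # random user factors
    Q = np.array(content, dtype=float)  # hybrid part: items start from their content vectors

    pairs = [(u, i) for u in range(n_users) for i in range(n_items)]
    for _ in range(epochs):
        for idx in rng.permutation(len(pairs)):  # visit cells in a random order each epoch
            u, i = pairs[idx]
            err = ratings[u, i] - P[u] @ Q[i]
            p_u = P[u].copy()  # keep the pre-update user vector for the item update
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_u - reg * Q[i])
    return P, Q


def mse(ratings: np.ndarray, P: np.ndarray, Q: np.ndarray) -> float:
    """Mean squared error between the ratings and the reconstructed scores P @ Q.T."""
    return float(np.mean((ratings - P @ Q.T) ** 2))


# The sample ratings from the table, plus placeholder 6-dimensional content vectors.
ratings = np.array([[3, 1, 3, 0, 0], [2, 3, 0, 2, 3], [0, 0, 3, 1, 0]], dtype=float)
content = np.random.default_rng(1).uniform(size=(5, 6))

P0, Q0 = train_hybrid_mf(ratings, content, epochs=0)  # initial state, no training
P, Q = train_hybrid_mf(ratings, content)
print(mse(ratings, P0, Q0), mse(ratings, P, Q))
```

Whether Q should keep moving freely away from the content vectors or be anchored to them (for example with a penalty on the distance) is a design choice; this sketch lets it move freely.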

Once the matrices $P$ and $Q$ have been learned, any new recommendation is just the calculation of a ‘score’ as the dot product $P_u \cdot Q_i$. If the score is high for a given user-item pair, recommend this item to this user; if it is low, don’t.

A good thing about using Kafka is that you can collect data from different pipelines, such as Google Forms or a quiz on Facebook, and aggregating them is not a problem. The nature and format of the final data remain the same, so the model training and prediction code can stay agnostic of the source.

“Simply, a producer will publish messages into the pipeline (start_producing()) And will consume messages with the final prediction (start_consuming()).” (reference 1)

What to do for the cold start problem?

Cold start is the situation where, at the beginning, you don’t have a model for a new user. You don’t know what this person likes, or what would be a good recommendation for them. There are some heuristic workarounds to this problem. The lamest approach is to ask the user two or three questions to get some idea of their interests, find the most similar user already in our dataset, and then recommend items based on that user. Of course, we would eventually learn from this user’s engagement or feedback whether the lame approach worked for them or not.

Lame approach in spotlight:
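A minimal sketch of this heuristic, assuming the new user's answers are encoded as a 0/1 vector over authors, cosine similarity is used to find the nearest existing user, and that neighbour's highest-rated authors are recommended, skipping the ones the new user already picked. Function names and the similarity measure are illustrative choices; the ratings are the sample table.

```python
import numpy as np


def most_similar_user(new_user: np.ndarray, ratings: np.ndarray) -> int:
    """Index of the existing user whose rating row has the highest cosine similarity."""
    norms = np.linalg.norm(ratings, axis=1) * np.linalg.norm(new_user)
    sims = ratings @ new_user / np.where(norms == 0, 1.0, norms)  # avoid division by zero
    return int(np.argmax(sims))


def cold_start_recommend(liked: list[int], ratings: np.ndarray, k: int = 3) -> list[int]:
    """Recommend the neighbour's top-rated items that the new user has not picked yet."""
    new_user = np.zeros(ratings.shape[1])
    new_user[liked] = 1.0
    neighbour = most_similar_user(new_user, ratings)
    ranked = np.argsort(-ratings[neighbour], kind="stable")
    return [int(i) for i in ranked if int(i) not in set(liked)][:k]


# Sample ratings: columns are ahmad-faraz, meer-anees, nida-fazli, akbar-allahabadi, jigar-moradabadi.
ratings = np.array([[3, 1, 3, 0, 0], [2, 3, 0, 2, 3], [0, 0, 3, 1, 0]], dtype=float)

print(cold_start_recommend([0], ratings, k=2))  # [2, 1] -> nida-fazli, meer-anees
```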

End result

So what would this model recommend to a new user who says they like the authors indexed 2, 8 and 12 in our dataset?

[nida-fazli [2], naseer-turabi [8], firaq-gorakhpuri [12]]

Recommendations:

['sahir-ludhianvi', 'allama-iqbal', 'nida-fazli']

Funnily enough, the third recommendation, nida-fazli, is one of the authors the user already said they like.

But this model could be improved a lot with better data. :) That’s all for now.

References

- Javier Rodriguez Zaurin: https://github.com/amir9ume/ml-pipeline

- Tutorial on how to use Google Forms to ingest data into pandas: https://pbpython.com/pandas-google-forms-part1.html

- Nicholas Hug: a blog post that was good for understanding SVD and its interpretation for recommendation.

- Dora Jambor, David Berger, Laurent Charlin: the Colab notebook from which the code for SGD training of collaborative filtering was taken.